Image credit: pixabay

sdelete - when secure delete fails

This is a local mirror of a blog post written by me and originally published by NCC Group.

Note: there are now better ways of wiping modern SSDs, as many are self-encrypting or support ATA commands for secure erase.

Introduction

Securely erasing media is an important process for any IT department. There are numerous methods of ensuring that sensitive data is removed before items are reissued or disposed of. The removal of such data is also mandated by various standards such as ISO 27001, which states:

A.11.2.7 – “All items of equipment containing storage media shall be verified to ensure that any sensitive data and licenced software has been removed or securely overwritten prior to disposal or re-use”

Secure erasure requirements are imposed on government contractors and private firms (for example under the Data Protection Act), and are relevant to anybody holding credit card data (under PCI DSS).

There are various ways to remove data from hard disks. Physical destruction (usually shredding) is obviously the most secure, though overwriting each sector or wiping free space allows reuse of media. It’s often a good idea to wipe drives before sending them to a third party for physical destruction or replacement, which is exactly what we were doing when we discovered an unusual bug in the Sysinternals SDelete utility.

In this blog post we’ll discuss a bug which can cause SDelete to fail in some circumstances even though it reports success. The Microsoft Security Response Centre (MSRC) were informed and determined that this is not a vulnerability, so our research is presented to inform others of the potential risks of software-based wiping solutions.

SDelete

SDelete is a Sysinternals utility for wiping files or free space. It is described as:

SDelete implements the Department of Defense clearing and sanitizing standard DOD 5220.22-M, to give you confidence that once deleted with SDelete, your file data is gone forever.

When wiping free space on a drive we noticed that SDelete will report success even if the process did not fully complete. This gives a false sense of assurance that data has been overwritten when it actually remains.

Our research suggests this bug occurs due to a lack of API error handling in the secure wipe function.

An overview of the bug

The process used by SDelete to wipe free space is well documented by Microsoft in the manual. First, the largest possible non-cached file is created and filled with random data, or with null bytes if the -z option is used. Afterward, the largest possible cached file is allocated and securely deleted, ensuring that all sectors are overwritten (it’s possible that some small amount of data would be left after the first file because of sector alignment).

However, wiping will silently fail if the device disappears or fails to communicate quickly enough during wiping, for example:

- the device is forcibly disconnected from the machine.

- there are excessive I/O errors or timeouts.

Any other event which causes the drive to disappear could potentially trigger the bug, for example if a device does not enumerate quickly enough after hibernation, though this scenario was not tested.

We discovered this when wiping a faulty SSD which occasionally locks up and resets. This was a known problem with the drive; communication usually restored itself within a few seconds and errors were logged by the operating system. However, the drive was failing and needed to be wiped before being sent for disposal.

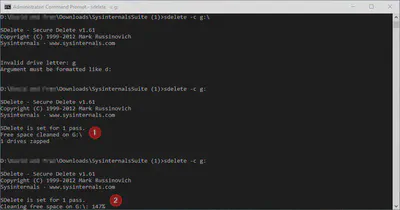

The reset happened around 40% through the wipe process at which point SDelete immediately terminated, but still showed:

SDelete is set for 1 pass.

Free space cleaned on G:

1 drives zapped

Because we were watching the wipe process this was noticed and investigated further. The tool is telling us that free space has been cleaned but it was obvious from the progress indicator and time elapsed that 60GB hadn’t been fully wiped.

Note that SDelete displays a percentage progress indicator but this disappears when wiping “finishes”, so it’s impossible to tell that only 40% was wiped.

During further testing one drive got to 114% free space wiped (see screenshot below) so we are unsure how reliable this metric is. It may be possible for total percentage to exceed 100% if additional free space becomes available during the wipe process but this was not possible in our test scenario because a new partition had been created which was not in use for any other files.

At marker “1” above the disk was physically removed halfway through wiping but the message still shows success. At marker “2” the free space progress is at 147% for an unknown reason (perhaps relating to the previous unsuccessful wipe).

The fundamental problem is that SDelete displays a message indicating that a drive was “zapped” and reports “Free space cleaned” when it was not.

No errors were displayed throughout the process and therefore SDelete can fail silently with no notification to the user, potentially leaving unwiped data on a partition.

Replicating the bug

This bug can be replicated with a USB3 to SATA dock and any hard drive. Removing the hard drive part way through the wipe will display the “success” message above. Obviously data cannot have been completely sanitised at this point.

The bug can also be triggered with a USB flash drive, but in that case a message box is displayed which may alert the user that something is wrong. The message reads:

The wrong volume is in the drive. Please insert volume KINGSTON into drive G: Cancel / Try Again / Continue

Clicking any of the three buttons terminates SDelete and the “success” message is shown.

A malicious program could monitor for the activity conducted by SDelete and send IOCTL_INTERNAL_USB_CYCLE_PORT or reset the disk using the Storage Management API, though these scenarios are hypothetical and unlikely to manifest themselves in real life. It’s far more likely that an old and failing disk gives up part way through wiping.

Identifying the root cause

For testing, the latest version of SDelete (v1.61) was used (MD5: e189b5ce11618bb7880e9b09d53a588f).
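Anyone repeating the analysis may want to confirm they are looking at the same binary. A minimal sketch of that check follows; the helper name file_md5 and the chunk size are assumptions made for illustration, and MD5 is used only because it is the digest quoted above.

```python
import hashlib


def file_md5(path, chunk_size=1 << 16):
    """Return the hex MD5 digest of the file at `path`, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

The result can then be compared with the value quoted above before any disassembly offsets are relied upon.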

While debugging the program to identify the root cause, we named the following two functions:

- sub_402c90: zap_drive()

- sub_401b90: fill_file()

zap_drive contains a number of checks, the first of which is whether the provided drive is a UNC path. If the drive is not a UNC path then a global variable, dword_425FEC (which we named dwDrivesZapped), is incremented. This is important later.

After more tests, including obtaining the disk cluster size, checking free space and checking disk quotas, the function fill_file is called multiple times to write random data to disk.

fill_file uses the SetFilePointer and WriteFile APIs to write random data to disk. The return values from these APIs are checked, but GetLastError is never called, so the cause of a failure is never established. When a write fails, the code appears to assume that the cause is benign and carries on; API failure may actually be (ab)used as a design feature in this function. When fill_file fails due to an API error it returns to zap_drive, which in turn eventually returns to _wmain.

However disk writes can fail for other reasons which are not part of the design such as I/O error. These need handling separately and should not simply “fall through” to the next part of the code path.
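The distinction between an expected failure and an unexpected one can be sketched in Python. This is an illustration only, not SDelete's code: fill_until_full, FakeVolume and the write callable are hypothetical names, and errno.ENOSPC stands in for the "disk full" condition that a Win32 program would identify with GetLastError (ERROR_DISK_FULL).

```python
import errno


def fill_until_full(write, chunk):
    """Call write(chunk) until the volume reports that it is full.

    `write` is a hypothetical callable that returns the number of bytes
    written and raises OSError on failure. Returns the total bytes written.
    """
    total = 0
    while True:
        try:
            total += write(chunk)
        except OSError as exc:
            if exc.errno == errno.ENOSPC:
                # Expected: free space is exhausted, so filling is done.
                return total
            # Anything else (I/O error, device removed) must not be
            # mistaken for completion, so let it propagate to the caller.
            raise


class FakeVolume:
    """Simulated volume: accepts `capacity` bytes, optionally failing early."""

    def __init__(self, capacity, fail_at=None):
        self.capacity = capacity
        self.fail_at = fail_at
        self.used = 0

    def write(self, chunk):
        if self.fail_at is not None and self.used >= self.fail_at:
            raise OSError(errno.EIO, "simulated device failure")
        if self.used + len(chunk) > self.capacity:
            raise OSError(errno.ENOSPC, "simulated disk full")
        self.used += len(chunk)
        return len(chunk)
```

With a healthy FakeVolume the function returns the full capacity; with fail_at set, the I/O error reaches the caller instead of being treated as the end of the fill.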

There’s also a VirtualAlloc in fill_file which could trigger a similar failure and return to _wmain. The return code from VirtualAlloc is checked but if allocation fails then all file writing code is skipped. Inability to allocate memory should usually be treated as a fatal error, terminating the program immediately and notifying the user if possible.

At the end of _wmain (at loc_40393a) the previously set global dword_425FEC (dwDrivesZapped) is checked and passed directly to wprintf with the format string %ld drives zapped. Therefore, so long as the UNC check passes, the user will be shown a message stating that disk wiping was successful, regardless of whether other checks failed and irrespective of whether any data was written.
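The control flow described above can be modelled in a few lines. The sketch below is a simplified Python model, not a decompilation: both function names are hypothetical, and wipe is an assumed callable that returns True only when a drive was fully overwritten.

```python
def wipe_report_flawed(drives, wipe):
    """Mimic the reported flow: count the drive first, ignore the outcome."""
    zapped = 0
    for drive in drives:
        zapped += 1   # counted before any data is written
        wipe(drive)   # result discarded, so failures are invisible
    return f"{zapped} drives zapped"


def wipe_report_checked(drives, wipe):
    """Count a drive only when the wipe reports that it completed."""
    zapped = 0
    failed = []
    for drive in drives:
        if wipe(drive):
            zapped += 1
        else:
            failed.append(drive)
    return f"{zapped} drives zapped", failed
```

In the first function the message depends only on how many drives were attempted, which matches the behaviour observed; in the second, a failed drive is excluded from the count and returned so that it can be reported.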

Potential fixes

When submitting this bug to MSRC we suggested a number of fixes, all of which would partly alleviate the problems associated with software data deletion utilities.

These are (in ascending order of preference):

- Do not hide wipe status after wiping completes. This will allow the user to judge whether wiping reached 100%.

- For each drive, check that total percentage >=100% and display an error if not.

- Check that total written data equals (or exceeds) the free space calculated at the beginning, using the return of dwBytesWritten from WriteFile (note this could be calculated in units of sector for large modern hard disks).

- Handle all potential API errors correctly, calling GetLastError and aborting with an obvious fatal error for any unrecoverable I/O errors.
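The second and third suggestions amount to a simple comparison, sketched below in Python. The names free_bytes, verify_coverage and IncompleteWipeError are hypothetical; shutil.disk_usage is used here as a stand-in for whatever free space query a real tool would make. A real implementation might also need a small tolerance for space consumed by filesystem metadata, which this sketch does not attempt to model.

```python
import shutil


class IncompleteWipeError(RuntimeError):
    """Raised when fewer bytes were written than were free at the start."""


def free_bytes(path):
    """Free space, in bytes, on the volume containing `path`."""
    return shutil.disk_usage(path).free


def verify_coverage(free_at_start, bytes_written):
    """Return the percentage covered, or raise if it is below 100%."""
    if free_at_start <= 0:
        return 100.0  # nothing to wipe
    percent = 100.0 * bytes_written / free_at_start
    if bytes_written < free_at_start:
        raise IncompleteWipeError(
            f"only {percent:.1f}% of free space was overwritten"
        )
    return percent
```

A wipe that stopped at 40%, as in the case described earlier, would raise an error here rather than being reported as a success.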

Conclusion

Claims made by vendor tools should be tested thoroughly before they are used as part of an internal secure erase process. In many cases the user will be reliant on certification by the vendor or an independent testing house; care should be taken to ensure this is well understood.

It is often easier to rely on a physical destruction process, shredding the disk into pieces. Organisations should check service contracts or warranties to ensure that faulty disks don’t have to be returned to the manufacturer to receive replacements (this is often an extra option with additional costs).

Unfortunately at present obtaining confidence from SDelete requires watching progress until 100% and checking the drive afterward. We hope that Microsoft will release an updated version of the utility to fix the bug and will update this post if that happens.
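One way to check a drive afterward is to plant a recognisable marker in a file before deleting it, then search a raw image of the volume for that marker once wiping has finished. The Python sketch below shows only the search step and makes several assumptions: find_marker is a hypothetical helper, the marker is any byte string chosen by the tester, and obtaining a readable raw image or device path is platform-specific and not covered here.

```python
def find_marker(path, marker, chunk_size=1 << 20):
    """Return the byte offsets at which `marker` occurs in the file at `path`."""
    if not marker:
        raise ValueError("marker must not be empty")
    offsets = []
    keep = len(marker) - 1  # bytes carried over to catch boundary matches
    tail = b""
    base = 0  # file offset of the first byte of `tail + chunk`
    with open(path, "rb") as fh:
        while True:
            chunk = fh.read(chunk_size)
            if not chunk:
                break
            window = tail + chunk
            start = 0
            while True:
                hit = window.find(marker, start)
                if hit < 0:
                    break
                offsets.append(base + hit)
                start = hit + 1
            tail = window[-keep:] if keep else b""
            base += len(window) - len(tail)
    return offsets
```

An empty result does not prove the wipe was complete, but any hit shows that data written before the wipe is still present.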

Update (July 2016)

In July 2016 a new version of SDelete (v2.0) was released by Microsoft. Much of the code referred to in this post appears to have been rewritten; however, there is no further information on the Sysinternals blog and no changelog has been released.